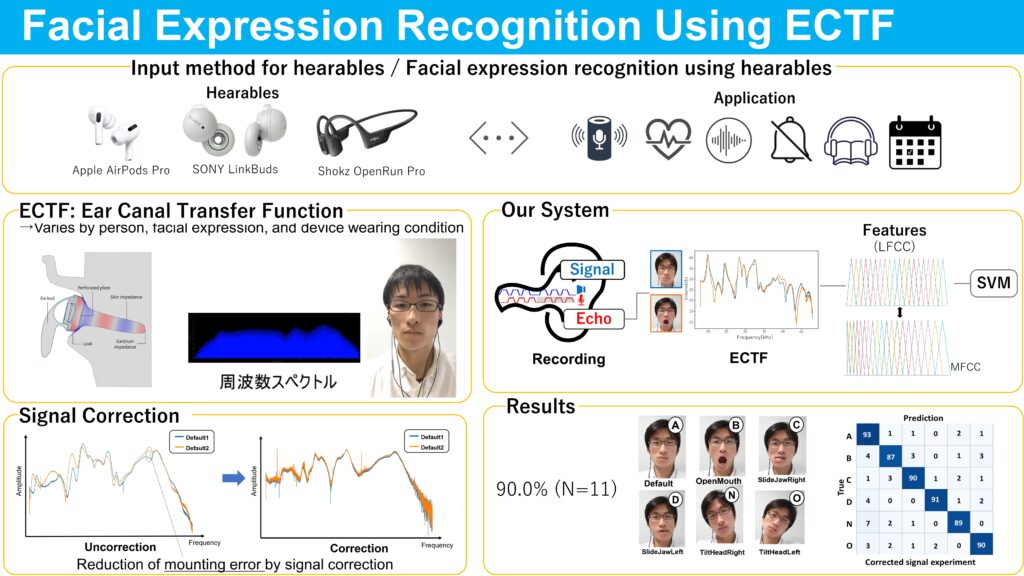

In this study, we propose a new input method for mobile and wearable computing that uses facial expressions. Facial muscle movements physically deform the ear canal. Our system exploits this property and estimates facial expressions from the ear canal transfer function (ECTF). The user wears earphones equipped with a microphone that records sound inside the ear canal. The system transmits ultrasonic band-limited swept sine signals and acquires the ECTF by analyzing the response. An important novel feature of our method is that it is easy to incorporate into a product, because many hearables (technically advanced in-ear electronic devices designed for multiple purposes) are already equipped with a speaker and a microphone. We investigated the performance of the proposed method on 21 facial expressions with 11 participants. We also propose a signal correction method that reduces the positional errors caused by attaching and detaching the device. The evaluation showed an F-score of 40.2% with the uncorrected signal and 62.5% with the corrected signal. We further investigated practical performance on six facial expressions and obtained an F-score of 74.4% with the uncorrected signal and 90.0% with the corrected signal. These results indicate that the ECTF can be used to recognize facial expressions with accuracy comparable to that of related work.
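The measurement step described above (emit a band-limited swept sine, record the response, derive a transfer function) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the linear sweep, the 18 to 22 kHz band, the 48 kHz sampling rate, and the plain spectral-division estimator are all assumptions chosen for illustration.

```python
import numpy as np

def swept_sine(f_start, f_end, duration, fs):
    """Linear swept sine from f_start to f_end (Hz); all parameters are illustrative."""
    t = np.arange(int(duration * fs)) / fs
    rate = (f_end - f_start) / duration            # sweep rate in Hz per second
    phase = 2 * np.pi * (f_start * t + 0.5 * rate * t ** 2)
    return np.sin(phase)

def estimate_transfer_function(sent, recorded, fs, band):
    """Estimate H(f) = R(f) / S(f), restricted to the swept band.

    Assumes `recorded` is time-aligned with `sent`; bins outside `band`
    are dropped because the probe signal carries little energy there.
    """
    n = len(sent)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum_sent = np.fft.rfft(sent)
    spectrum_rec = np.fft.rfft(recorded, n=n)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return freqs[in_band], spectrum_rec[in_band] / spectrum_sent[in_band]

# Hypothetical settings: 50 ms sweep, 18-22 kHz, sampled at 48 kHz.
fs = 48_000
band = (18_000.0, 22_000.0)
probe = swept_sine(band[0], band[1], duration=0.05, fs=fs)

# Stand-in for a real microphone recording: an attenuated, delayed copy.
simulated_recording = 0.5 * np.roll(probe, 10)

freqs, ectf = estimate_transfer_function(probe, simulated_recording, fs, band)
magnitude_db = 20 * np.log10(np.abs(ectf))         # candidate feature vector
```

In a real system the magnitude (and possibly phase) of the estimated response would serve as input features for a classifier. The simulated recording here only stands in for the ear canal response so that the sketch runs end to end.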

Publication

Takashi Amesaka, Hiroki Watanabe, and Masanori Sugimoto. Facial Expression Recognition Using Ear Canal Transfer Function. In Proceedings of ISWC 2019, London, UK, pp. 1-9, 2019. [ACM]